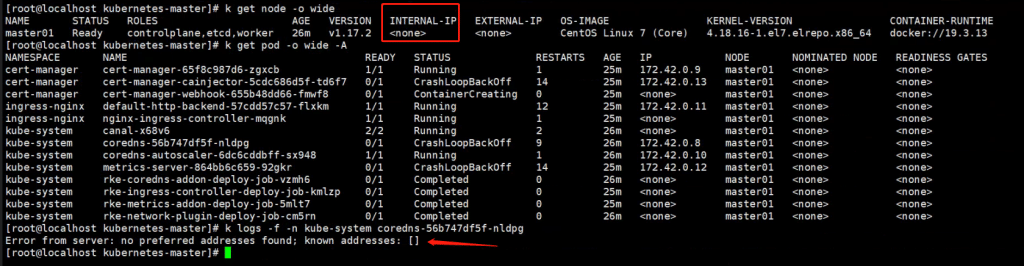

After I deployed Kubernetes with the RKE tool, the cluster did not work properly: no node showed an internal IP when I ran

kubectl get node -o wide -A

Some pods were running but had no pod IP, while others, such as CoreDNS and the Nginx ingress controller, kept restarting and printed very few logs.

At first, I suspected a problem in the RKE configuration file, Cluster.yaml. However, after checking it again and again, everything looked correct, and a fresh installation still produced the same result.

Next, I checked the API server log. The message showed that the node genuinely had no internal IP; it was not just a display issue.

apiserver received an error that is not an metav1.Status: &node.NoMatchError{addresses:[]v1.NodeAddress(nil)}
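The error says the node object carries no addresses at all. As a minimal sketch (the function names are hypothetical, not part of kubectl or RKE), the same condition can be checked across every node by parsing the JSON from `kubectl get nodes -o json` and looking for an `InternalIP` entry in each node's `status.addresses`:

```python
import json
import subprocess


def nodes_without_internal_ip(node_list: dict) -> list[str]:
    """Return names of nodes whose status.addresses has no InternalIP entry."""
    missing = []
    for node in node_list.get("items", []):
        name = node.get("metadata", {}).get("name", "<unknown>")
        # status.addresses may be absent entirely, which is what the
        # NoMatchError{addresses:[]v1.NodeAddress(nil)} message describes.
        addresses = node.get("status", {}).get("addresses") or []
        if not any(a.get("type") == "InternalIP" for a in addresses):
            missing.append(name)
    return missing


def load_nodes_from_kubectl() -> dict:
    """Fetch the node list as JSON; needs kubectl on PATH and a kubeconfig."""
    out = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(out)
```

On a healthy cluster, `nodes_without_internal_ip(load_nodes_from_kubectl())` should return an empty list.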

So I kept digging into the kubelet log, where I found some useful information:

Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

failed to get node "master01" when trying to set owner ref to the node lease: nodes "master01" not found

Failed to set some node status fields: can't get ip address of node master01. error: no default routes found in "/proc/net/route" or "/proc/net/ipv6_route"

The last message says that no default routes were found in /proc/net/route or /proc/net/ipv6_route. When I checked the routing table on the OS, there was indeed no default route!
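To illustrate what that message is looking for, here is a minimal Python sketch that scans the text of /proc/net/route for an IPv4 default route, i.e. a row whose Destination is `00000000`. It is not the kubelet's actual Go implementation, and the function names are hypothetical. An empty result corresponds to the "no default routes found" situation above:

```python
import socket
import struct


def ipv4_default_routes(route_text: str) -> list[tuple[str, str]]:
    """Return (interface, gateway) pairs for IPv4 default routes.

    Expects the /proc/net/route text format: a header line starting with
    "Iface", then whitespace-separated fields where Destination and Gateway
    are 32-bit hex values printed in the host's native byte order.
    """
    routes = []
    for line in route_text.splitlines():
        fields = line.split()
        # Skip the header and any blank or truncated lines.
        if len(fields) < 3 or fields[0] == "Iface":
            continue
        iface, dest_hex, gw_hex = fields[0], fields[1], fields[2]
        # A default route has destination 0.0.0.0.
        if int(dest_hex, 16) != 0:
            continue
        # Pack in native order to recover the original network-order bytes.
        gateway = socket.inet_ntoa(struct.pack("=I", int(gw_hex, 16)))
        routes.append((iface, gateway))
    return routes


def read_default_routes(path: str = "/proc/net/route") -> list[tuple[str, str]]:
    """Read the live routing table (Linux only) and return its default routes."""
    with open(path) as f:
        return ipv4_default_routes(f.read())
```

On the command line, `ip route show default` gives the same answer more directly.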

Meanwhile, I checked the network interface configuration file: the gateway setting was missing.
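The original does not say which distribution was used, so the following is only a sketch that assumes a RHEL/CentOS-style `ifcfg-<interface>` file (KEY=value lines, as used by network-scripts) and checks whether it sets a `GATEWAY` value. The helper names are hypothetical. With DHCP the gateway usually comes from the DHCP server, and on these systems `GATEWAY` can also be set globally in /etc/sysconfig/network, so treat a `False` result as a hint rather than proof:

```python
def parse_ifcfg(text: str) -> dict[str, str]:
    """Parse KEY=value lines from an ifcfg-style file, ignoring comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be quoted, e.g. GATEWAY="192.168.1.1".
        settings[key.strip()] = value.strip().strip('"\'')
    return settings


def has_gateway(text: str) -> bool:
    """True if the file sets a non-empty GATEWAY value."""
    return bool(parse_ifcfg(text).get("GATEWAY"))
```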

I was quite sure the problem was caused by the missing default route, and that the root cause was the network configuration.

Finally, I updated the configuration file and restarted the network. Kubernetes started working, and all pods ran properly.